DeepSeek ChatGPT: Do You Really Need It? This Will Help You Decide!

Supports 338 programming languages and a 128K context length. Both had a vocabulary size of 102,400 (byte-level BPE) and a context length of 4096. They were trained on 2 trillion tokens of English and Chinese text obtained by deduplicating Common Crawl. These weights can then be used for inference, i.e. for prediction on new inputs, for example to generate text.

Applications: it can assist with code completion, writing code from natural-language prompts, debugging, and more. It creates more inclusive datasets by incorporating content from underrepresented languages and dialects, ensuring a more equitable representation. Whether enhancing conversations, producing creative content, or providing detailed analysis, these models make a real impact. Learning and Education: LLMs could be a great addition to education by providing personalized learning experiences. Personal Assistant: future LLMs might be able to manage your schedule, remind you of important events, and even help you make decisions by providing useful information. This approach not only broadens the variety of training material but also addresses privacy concerns by reducing reliance on real-world data, which may contain sensitive information.

Concerns about AI coding assistants. What is behind DeepSeek-Coder-V2 that lets it beat GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro, Llama-3-70B and Codestral in coding and math?
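The training-data description above mentions deduplicating Common Crawl. The post does not say how that was done, so the following is only a minimal sketch, assuming that "duplicate" means an exact match after lowercasing and collapsing whitespace. It hashes each normalized document with SHA-256 and keeps the first copy. Large-scale pipelines often add near-duplicate detection (for example MinHash) on top of this; the function names here are hypothetical.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def dedupe_exact(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing SHA-256 digests of normalized text."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


if __name__ == "__main__":
    crawl = ["Hello   world", "hello world", "A different page"]
    print(dedupe_exact(crawl))  # ['Hello   world', 'A different page']
```

Hashing keeps memory proportional to the number of unique documents rather than their total size, which is why digest sets are a common first pass before more expensive fuzzy matching.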

Excels in coding and math, beating GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro and Codestral. Hermes-2-Theta-Llama-3-8B excels at a wide range of tasks. We carried out a range of analyses to investigate how factors such as the programming language, the number of tokens in the input, the models used to calculate the score, and the models used to produce our AI-written code would affect the Binoculars scores and, ultimately, how well Binoculars was able to distinguish between human-written and AI-written code. Alibaba Cloud also shared scores suggesting that its AI beats OpenAI's and Anthropic's models on certain benchmarks. A Content List With Bulk Actions Using Ancient HTML and Modern CSS (Cloud Four), via Brad Frost: here's a great article by Tyler Sticka about using li:has(:checked) to elegantly style items in a list. Creative Content Generation: write engaging stories, scripts, or other narrative content. We do not support or condone the illegal or malicious use of VPN services. AI Principles: Recommendations on the Ethical Use of Artificial Intelligence by the Department of Defense. Why this matters: intelligence is the best defense. Research like this both highlights the fragility of LLM technology and illustrates how, as you scale up LLMs, they appear to become cognitively capable enough to have their own defenses against weird attacks like this.
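The Binoculars scores discussed above compare two language models' views of the same text. As a rough sketch, assuming the per-position model outputs are already available as plain Python lists, the score can be computed as a log-perplexity divided by a log cross-perplexity. Which model fills which role (the paper calls them "observer" and "performer") should be checked against the Binoculars paper; here model A supplies the log-probabilities and model B the weighting distribution, and all names are hypothetical.

```python
import math


def binoculars_score(
    token_ids: list[int],
    logprobs_a: list[list[float]],
    probs_b: list[list[float]],
) -> float:
    """Binoculars-style score: log-perplexity divided by log cross-perplexity.

    token_ids[i]: the observed token at position i.
    logprobs_a[i]: model A's log-probabilities over the vocabulary at position i.
    probs_b[i]: model B's probabilities over the vocabulary at position i.
    """
    n = len(token_ids)
    if n == 0 or len(logprobs_a) != n or len(probs_b) != n:
        raise ValueError("inputs must be non-empty and aligned by position")

    # Log-perplexity: mean negative log-likelihood of the observed tokens under model A.
    log_ppl = -sum(logprobs_a[i][tok] for i, tok in enumerate(token_ids)) / n

    # Log cross-perplexity: mean cross-entropy of model A's log-probs weighted by model B's distribution.
    log_xppl = -sum(
        sum(p * lp for p, lp in zip(probs_b[i], logprobs_a[i])) for i in range(n)
    ) / n
    if log_xppl == 0:
        raise ValueError("cross-perplexity term is zero; score is undefined")

    return log_ppl / log_xppl


if __name__ == "__main__":
    # Toy 2-token vocabulary: model A is confident in the observed token, model B is uniform.
    score = binoculars_score([0], [[math.log(0.9), math.log(0.1)]], [[0.5, 0.5]])
    print(round(score, 3))
```

Lower scores point toward machine-generated text. Any decision threshold would need to be calibrated on labeled human and AI samples, which is where factors like input length and programming language come into play.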

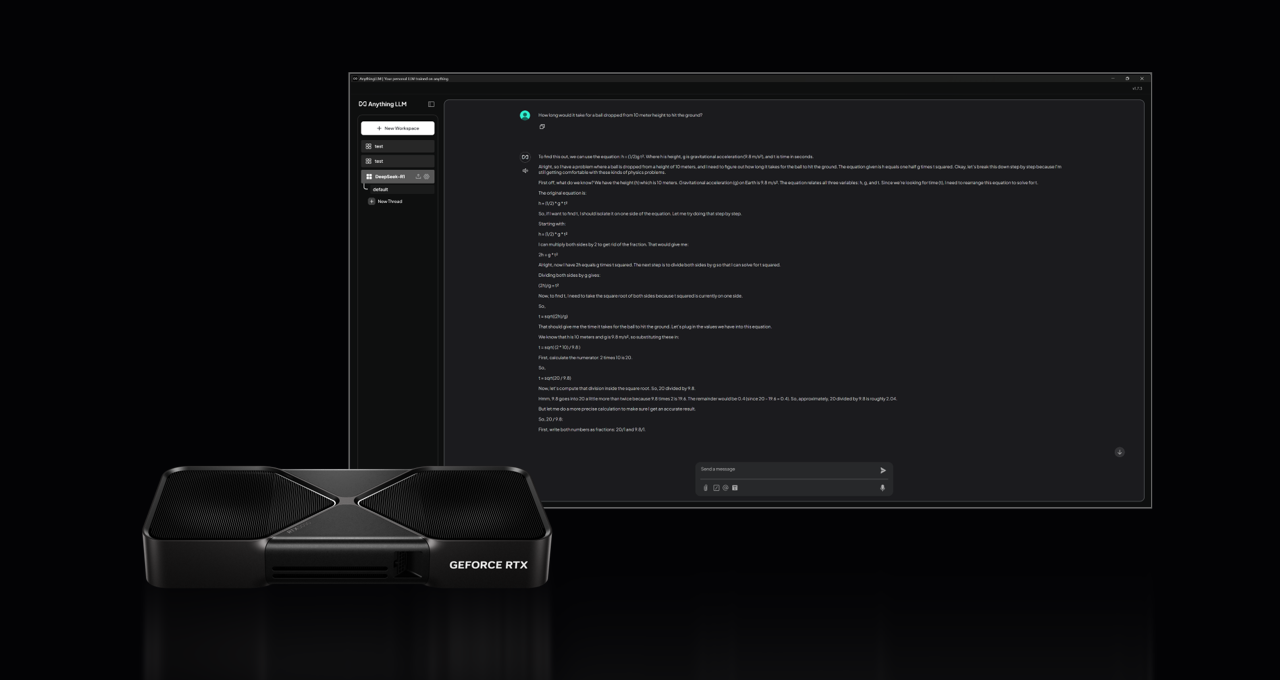

We already see that trend with tool-calling models; if you watched the recent Apple WWDC, you can imagine where the usability of LLMs is heading. Recently, Firefunction-v2, an open-weights function-calling model, was released. Task Automation: automate repetitive tasks with its function-calling capabilities. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT4-Turbo on code-specific tasks. Every day we see a new large language model. Today, they are giant intelligence hoarders. At Portkey, we are helping developers building on LLMs with a blazing-fast AI Gateway that provides resiliency features like load balancing, fallbacks, and semantic caching. Smarter Conversations: LLMs are getting better at understanding and responding to human language. As we have seen throughout the blog, these have been truly exciting times with the launch of these five powerful language models. Hermes-2-Theta-Llama-3-8B is a cutting-edge language model created by Nous Research. In their research paper, DeepSeek's engineers said they had used about 2,000 Nvidia H800 chips, which are less advanced than the most cutting-edge chips, to train their model. More and more players are commoditizing intelligence, not just OpenAI, Anthropic, and Google.
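DeepSeek-Coder-V2 is described above as a Mixture-of-Experts model. The post does not describe its router, so this is only a generic top-k gating sketch, not DeepSeek's implementation: a softmax over gate logits picks the k highest-scoring experts, their weights are renormalized, and only those experts run. Inputs are scalars and the gate logits are fixed for readability; in a real model they come from a learned router applied to each token, and the expert functions here are hypothetical.

```python
import math
from typing import Callable


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a list of gate logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k experts with the highest gate probability; renormalize their weights to sum to 1."""
    if not 1 <= k <= len(gate_logits):
        raise ValueError("k must be between 1 and the number of experts")
    probs = softmax(gate_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]


def moe_forward(
    x: float,
    experts: list[Callable[[float], float]],
    gate_logits: list[float],
    k: int = 2,
) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


if __name__ == "__main__":
    # Four toy "experts" acting on a scalar input; only two of them run per call.
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: -v, lambda v: v * v]
    gate_logits = [0.1, 2.0, -1.0, 1.5]
    print(top_k_route(gate_logits, k=2))  # experts 1 and 3 are selected
    print(moe_forward(3.0, experts, gate_logits, k=2))
```

The point of the design is that total parameter count can grow with the number of experts while the compute per token grows only with k.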

Read more in our detailed guide to AI pair programming. Generating synthetic data is more resource-efficient than traditional training methods. Research organizations such as NYU, University of Michigan AI labs, Columbia University, and Penn State are also associate members of the LF AI & Data Foundation. DeepSeek is built to handle complex, in-depth information searches, making it ideal for professionals in research and data analytics. Detailed Analysis: provide in-depth financial or technical analysis using structured data inputs. The supercomputer's data center will be built in the US on 700 acres of land. Whether DeepSeek will revolutionize AI development or simply serve as a catalyst for further advances in the field remains to be seen, but the stakes are high, and the world will be watching. None of that is to say the AI boom is over, or that it will take a radically different form going forward. But fast forward to now, and the US is doing a lot of that. A Blazing Fast AI Gateway.
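Two of the gateway features mentioned earlier in this post, load balancing and fallbacks, can be illustrated generically. This is a minimal sketch and not Portkey's API: the provider callables, weights, and function names are all hypothetical. A primary provider is chosen at random in proportion to its weight, and if it raises, the remaining providers are tried in order.

```python
import random
from typing import Callable

# Hypothetical provider type: takes a prompt, returns a completion or raises.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider in the fallback chain has failed."""


def pick_weighted(weights: dict[str, float], rng: random.Random) -> str:
    """Load balancing: choose a provider name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def call_with_fallback(prompt: str, chain: list[tuple[str, Provider]]) -> tuple[str, str]:
    """Fallbacks: try providers in order and return (name, reply) from the first that succeeds."""
    errors: list[str] = []
    for name, provider in chain:
        try:
            return name, provider(prompt)
        except Exception as exc:  # a real gateway would separate retryable from fatal errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def route(prompt: str, providers: dict[str, Provider], weights: dict[str, float], rng: random.Random) -> tuple[str, str]:
    """Pick a primary by weight, then fall back to the remaining providers in insertion order."""
    primary = pick_weighted(weights, rng)
    chain = [(primary, providers[primary])] + [(n, p) for n, p in providers.items() if n != primary]
    return call_with_fallback(prompt, chain)


# Demo providers: hypothetical stand-ins for real model endpoints.
def flaky(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def echo(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    rng = random.Random(0)
    providers = {"primary": flaky, "backup": echo}
    print(route("hi", providers, {"primary": 0.8, "backup": 0.2}, rng))  # ('backup', 'echo: hi')
```

Whichever provider the weighted draw picks first, the failing one is skipped and the request still succeeds, which is the resiliency property the gateway description is about.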
